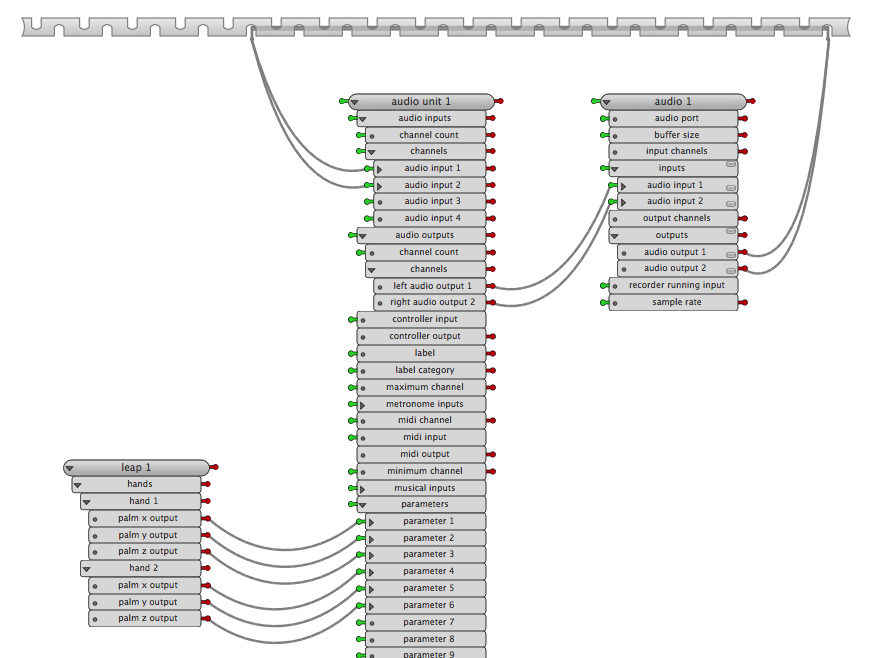

Yesterday afternoon I took a few hours to write an EigenD agent for the Leap. Currently it only sends out the x, y and z positions of two palms relative to the Leap device itself. The API is very intuitive, and it shouldn't take long to gradually add support for hand directions and fingers; I first wanted to play around with it for a while, though.
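To turn palm positions into something an effect parameter can use, each axis has to be scaled into a control range. The sketch below is a hypothetical illustration of that idea, not the agent's actual code: it assumes positions arrive as millimetre coordinates relative to the device, and the `AxisRange` bounds and the `palm_to_controls` helper are made-up names and values chosen only for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AxisRange:
    """Hypothetical usable range for one axis, in millimetres (assumed values)."""
    low: float
    high: float

    def normalize(self, value: float) -> float:
        """Map value linearly onto 0.0..1.0, clamping anything outside the range."""
        t = (value - self.low) / (self.high - self.low)
        return min(1.0, max(0.0, t))


# Illustrative bounds only; they are not taken from EigenD or the Leap SDK.
RANGES = {
    "x": AxisRange(-200.0, 200.0),  # left/right
    "y": AxisRange(50.0, 450.0),    # height above the device
    "z": AxisRange(-150.0, 150.0),  # towards/away from the user
}


def palm_to_controls(palm_xyz):
    """Convert one palm's (x, y, z) position into three 0..1 control values."""
    x, y, z = palm_xyz
    return (
        RANGES["x"].normalize(x),
        RANGES["y"].normalize(y),
        RANGES["z"].normalize(z),
    )


# A palm centred above the device at 250 mm height maps to the middle of every axis.
print(palm_to_controls((0.0, 250.0, 0.0)))  # (0.5, 0.5, 0.5)
```

Clamping keeps a hand that drifts out of the assumed range from pushing a parameter past its limits, which matters when detection gets unreliable near the edges of the field of view.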

In this experiment I control two effects in Native Instruments' Guitar Rig 5. Guitar Rig is processing one of my songs as it plays from iTunes, and you can hear the recorded result. I had to align the audio to the video manually, so the sync might not be perfect.

This developer version of the Leap device has a slightly shorter range and a narrower field of view, which is why it sometimes fails to detect my hands near the natural boundaries of the tracking area. The production versions will not suffer from this.

The song is ‘Never Lose’ from my album ‘Dream Like a Tree’.
