A few months ago I set out to replace my MIDI guitar performance environment with a more flexible and intuitive solution. For various reasons I ended up using our own Eigenharp software, EigenD, even though I never intended to. One of the things that struck me compared to other solutions was that software synths felt much nicer to play. I used to feel very disconnected from the musical performance, and with my new setup this was much less the case. I never had time to analyze exactly why, until research I did last week revealed a critical lack of MIDI timestamping support in most plugin hosts and DAWs.

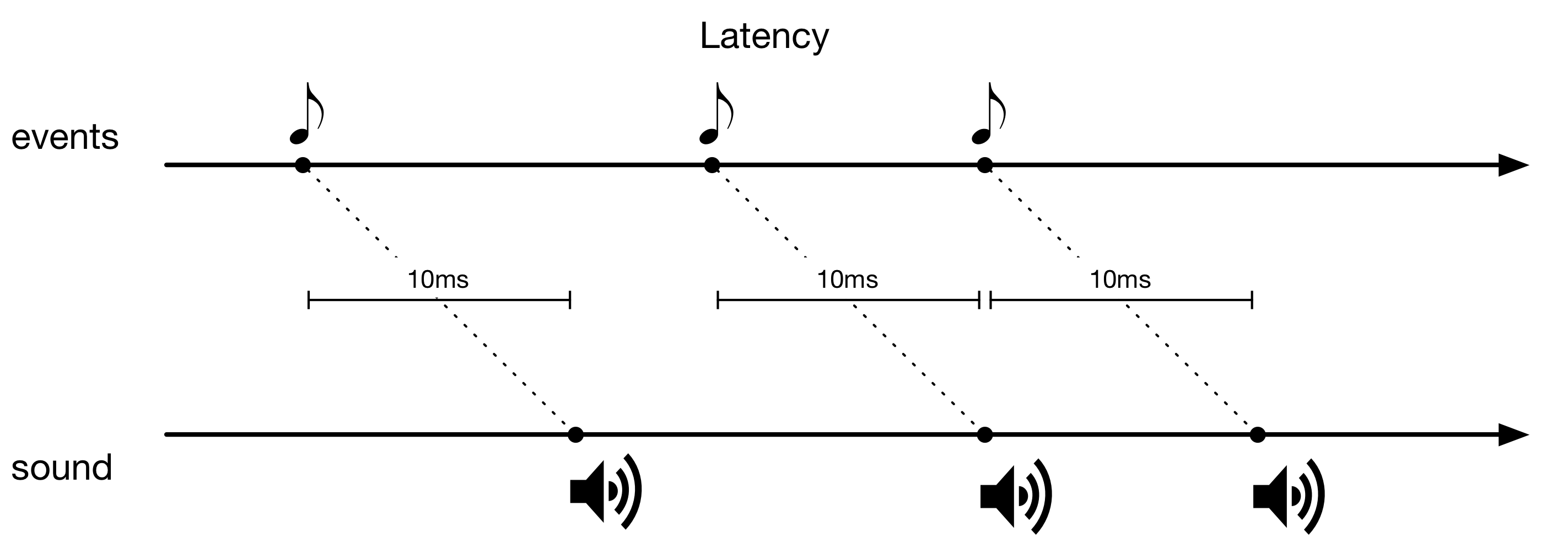

Let’s first get some basic terminology out of the way. Most timing aspects of electronic music can be described through latency and jitter.

What is latency?

Latency is a short constant delay that is introduced between the occurrence of an event and the actual perception of the audio.

Interestingly, musicians are extremely good at dealing with latency because it’s predictable and already present all around us. The simple fact that sound travels through air introduces latency. Hence, anybody who plays in a band naturally compensates for the latency that results from the different positions of the other band members on stage or in the rehearsal room. Pipe organists are possibly the most extreme example of our capacity to deal with latency, coping with delays of up to hundreds of milliseconds caused by the physics of the pipes and the spaces they’re played in.
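The latency that air itself introduces is easy to quantify. A minimal Python sketch, assuming a speed of sound of about 343 m/s (dry air at roughly 20 °C); the function names are hypothetical:

```python
# Assumed speed of sound in dry air at roughly 20 °C, in metres per second.
SPEED_OF_SOUND_M_PER_S = 343.0

def acoustic_delay_ms(distance_m: float) -> float:
    """Time in milliseconds for sound to travel distance_m metres through air."""
    return 1000.0 * distance_m / SPEED_OF_SOUND_M_PER_S

def distance_for_delay_m(delay_ms: float) -> float:
    """Distance in metres that sound covers through air in delay_ms milliseconds."""
    return SPEED_OF_SOUND_M_PER_S * delay_ms / 1000.0

# A bandmate standing 3 m away.
print(f"{acoustic_delay_ms(3.0):.1f} ms")
# 11.6 ms, the length of one 512-sample buffer at 44.1 kHz.
print(f"{distance_for_delay_m(11.6):.1f} m")
```

Every metre of air adds roughly 2.9ms, so a bandmate 3m away is heard about 8.7ms late, and 11.6ms corresponds to roughly 4m of air.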

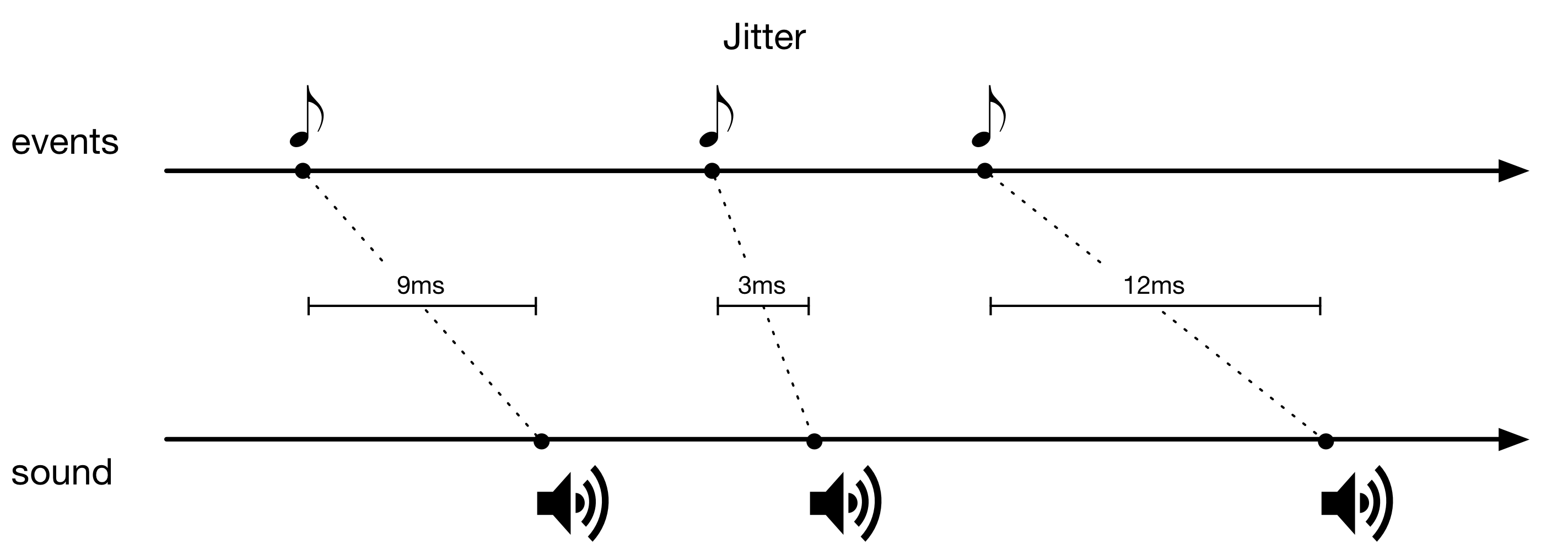

What is jitter?

Jitter, on the other hand, describes a deviation from a steady signal: evenly spaced events come out scattered.

Unlike latency, jitter is very disconcerting for a musician because it’s not constant and the timing of the sound changes unpredictably from event to event: sometimes a note is heard almost immediately, at other times it’s heard with a varying delay that can’t be anticipated. At extreme levels, jitter makes it impossible to perform at all, but at lower variations of a few milliseconds, everything just feels off and you never connect with the music you’re playing, without really being able to tell why. Academic studies have indicated that jitter becomes noticeable between 1 and 5ms, centred around 2-3ms (Van Noorden 1975, Michon 1964, and Lunney 1974). Note that these tests only measured the perception of jitter while listening, not while performing. Intuitively, I expect performing musicians to be even less tolerant of it.

Just as with latency, jitter can be introduced at many stages of your gear: the clocking of an audio interface, the performance of a computer, the reliability of cables and much more. It turns out, though, that even if you’re using good-quality hardware with rock-steady clocks that minimizes these timing problems, many software instrument hosts don’t use MIDI event timestamps and cause the generated audio to be quantized to sample buffer boundaries, introducing jitter at the final step.

Let’s wrap up the terminology with a quick recap of sample rate and buffer size.

Sample rate and buffer size

You probably know that digital audio is created by generating samples at a certain frequency, usually at 44.1kHz. This means that every second, 44100 sample values are calculated. These are then converted to the analogue domain by a D/A converter and result in sound coming from headphones or from loudspeakers.

Since computers need time to calculate this data, software instrument hosts use one or several internal buffers, typically of 256 or 512 samples. These buffers, combined with the latency of the hardware and drivers, are what people are talking about when they refer to the latency of software instruments. At a sample rate of 44.1kHz, a buffer of 512 samples gives the computer 11.6ms (1000ms * 512 / 44100) to perform the calculations.
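The buffer arithmetic above, 1000ms * buffer size / sample rate, can be sketched in Python for the four buffer sizes used in the tests later in this article. The function name is hypothetical:

```python
def buffer_duration_ms(buffer_size: int, sample_rate: float = 44100.0) -> float:
    """Time available to fill one audio buffer: 1000 ms * buffer_size / sample_rate."""
    return 1000.0 * buffer_size / sample_rate

# The four buffer sizes used in the tests, all at 44.1 kHz.
for size in (1024, 512, 256, 128):
    print(f"{size:5d} samples -> {buffer_duration_ms(size):.1f} ms")
```

This gives roughly 23.2, 11.6, 5.8 and 2.9ms respectively.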

Essentially, the software is always running a bit behind to give it time to come up with the next batch of data, which the audio driver will periodically ask for. If this data isn’t ready in time, you’ll start hearing crackles and either need to increase the sample buffer size, reduce the sample rate or give the software instruments less work to do by, for example, reducing polyphony.

MIDI buffer timing

All this is pretty straightforward and intuitive; it gets a bit more complicated, though, when MIDI comes into play.

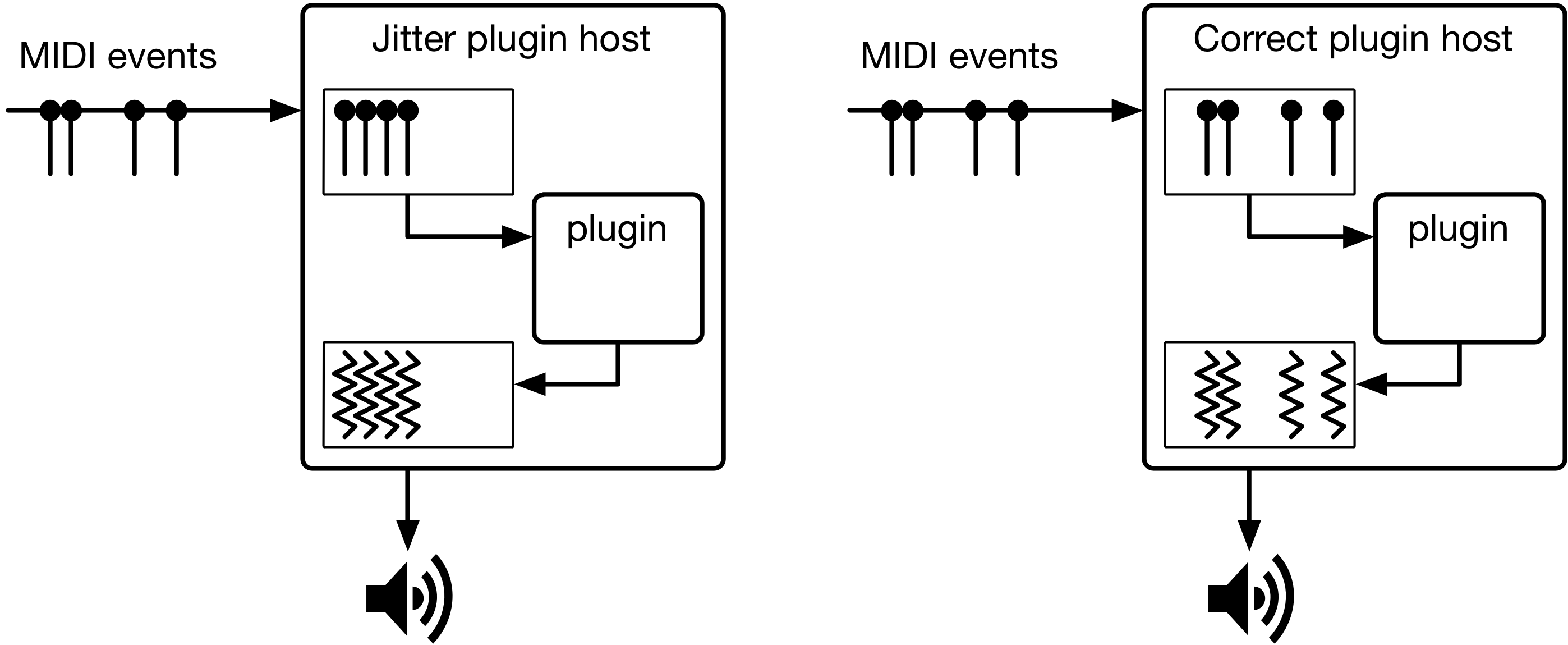

The timing of the MIDI data is completely unrelated to the audio stream; it’s up to the host to correlate them. MIDI events merely arrive as reliably as possible at the host, which subsequently has to pass them on to the audio plugin. Again, the host uses an internal buffer to store the MIDI data that arrived while the plugin was calculating a batch of samples. When it’s time to calculate the next batch, the host simply passes the buffer of MIDI data to the software plugin so that it can calculate the corresponding audio.

It’s here that additional MIDI jitter can set in. If the host doesn’t remember when the MIDI events arrived while storing them in a buffer, the software plugin can only assume that they started at the beginning of the sample buffer it’s generating and that they followed each other, one by one, without any delays in between. Since everything is snapped to the beginning of a sample buffer, this effectively quantizes the MIDI events to a grid with the length of the sample buffer. For a buffer of 512 samples at 44.1kHz, the audio is then quantized at intervals of 11.6ms.

Obviously, this means that the timing of the musical performance is lost and that the generated audio will be delayed by a different, unpredictable amount for each event, hence introducing jitter.
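A host that avoids this only needs to remember when each event arrived and turn that into a sample offset inside the next block. The following is a minimal Python sketch under that assumption; `HostMidiBuffer`, `TimedMidiEvent` and their methods are hypothetical names, not any host’s actual code. In real plugin APIs the resulting offset is passed per event, for example in the `deltaFrames` field of a VST 2 `VstMidiEvent` or the `inOffsetSampleFrame` argument of the Audio Unit `MusicDeviceMIDIEvent()` call.

```python
from dataclasses import dataclass, field
from typing import List, Tuple

@dataclass
class TimedMidiEvent:
    arrival_s: float  # host clock time at which the event arrived, in seconds
    message: bytes    # raw MIDI bytes, e.g. b"\x90\x3c\x64" (note-on, middle C)

@dataclass
class HostMidiBuffer:
    """Hypothetical host-side MIDI queue that remembers arrival times."""
    sample_rate: float
    pending: List[TimedMidiEvent] = field(default_factory=list)

    def on_midi_in(self, arrival_s: float, message: bytes) -> None:
        # Called as MIDI arrives while the previous block is being rendered:
        # store the event together with *when* it arrived.
        self.pending.append(TimedMidiEvent(arrival_s, message))

    def drain_for_block(self, window_start_s: float,
                        block_size: int) -> List[Tuple[int, bytes]]:
        """Return (sample_offset, message) pairs for the block about to render.

        window_start_s is the host time at which collection for this block
        began. Each event keeps its position inside that window, so every
        event gets the same one-block latency instead of being snapped to
        offset 0.
        """
        out = []
        for ev in self.pending:
            offset = round((ev.arrival_s - window_start_s) * self.sample_rate)
            # Keep offsets inside the block in case of clock rounding.
            out.append((min(max(offset, 0), block_size - 1), ev.message))
        self.pending.clear()
        return out

buf = HostMidiBuffer(sample_rate=44100.0)
buf.on_midi_in(0.004, b"\x90\x3c\x64")  # note-on 4 ms into the window
buf.on_midi_in(0.010, b"\x80\x3c\x00")  # note-off 10 ms into the window
print(buf.drain_for_block(window_start_s=0.0, block_size=512))
```

Here the note-on lands at sample offset 176 and the note-off at 441 rather than both at offset 0: every event is delayed by exactly one block, which is pure latency with no added jitter.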

Only some plugin hosts and DAWs behave correctly in this regard.

Real world implications

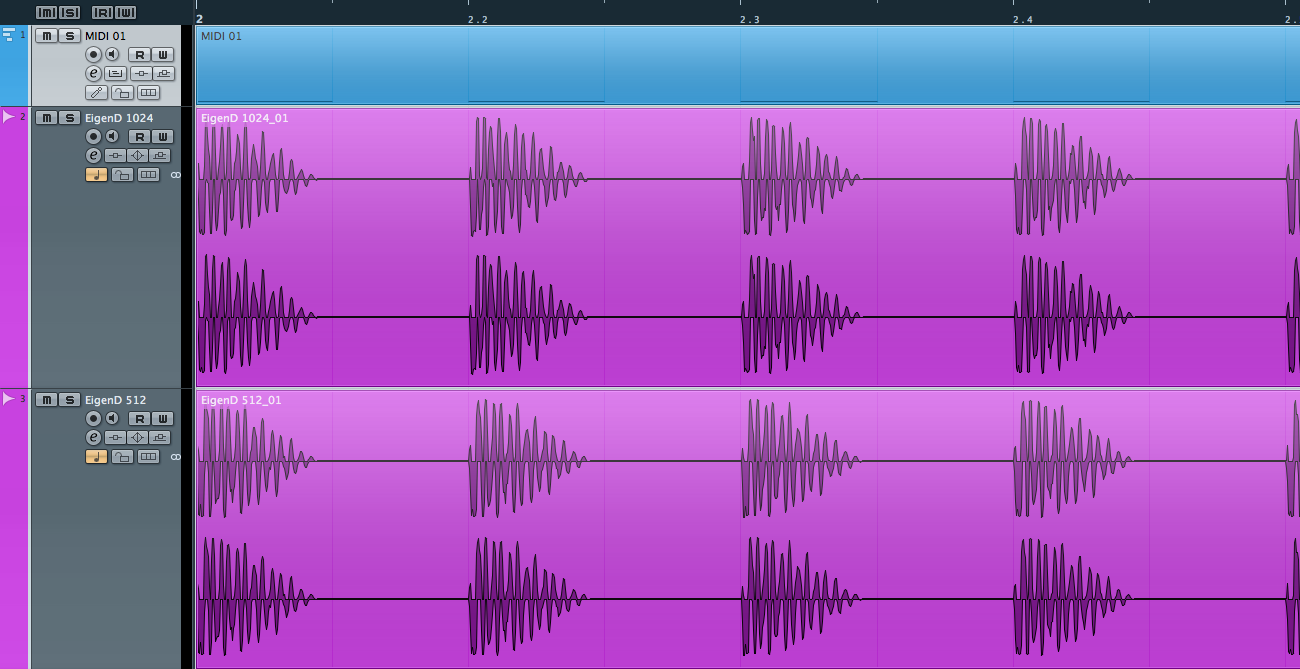

While it’s interesting to theorize about the implications of MIDI buffer jitter, it’s much more useful to look at a concrete example.

I set up a test environment in Cubase 6 on Mac OS X, using the sequencer to play a constant stream of eighth notes at 180BPM. The MIDI messages were sent over the local IAC MIDI bus to various plugin hosts. In each host I set up Native Instruments Absynth with a percussive snare sound. The audio output of the host was sent digitally from my Mac’s built-in audio output to my Metric Halo ULN-2 FireWire interface over an optical cable, and finally recorded back into Cubase as an audio track. I tested four different sample buffer sizes for each host, 1024, 512, 256 and 128, all at 44.1kHz. When all the tracks were recorded, I aligned the start of the first recorded waveform with a bar line in Cubase so that all the other waveforms could be checked for timing against the vertical lines of the beats.
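The visual check against the beat lines can also be done numerically once the onset positions are known. A minimal Python sketch, assuming onsets are given as sample indices in the recording; `grid_deviation_ms` is a hypothetical helper, and the example onsets are invented for illustration, not measured results:

```python
from typing import List

def grid_deviation_ms(onsets: List[int], sample_rate: float = 44100.0,
                      bpm: float = 180.0, notes_per_beat: int = 2) -> List[float]:
    """Signed deviation of each onset from the nearest eighth-note grid line, in ms.

    onsets are sample indices of note starts in the recording. The first
    onset defines the grid origin, mirroring the manual alignment of the
    first recorded waveform with a bar line.
    """
    step = sample_rate * 60.0 / bpm / notes_per_beat  # samples per grid step
    origin = onsets[0]
    deviations = []
    for onset in onsets:
        position = (onset - origin) / step          # position in grid steps
        error_steps = position - round(position)    # distance to nearest line
        deviations.append(1000.0 * error_steps * step / sample_rate)
    return deviations

# Invented onsets for illustration only, not measured data.
example = [0, 7350, 15000, 21950]
devs = grid_deviation_ms(example)
print([f"{d:+.2f} ms" for d in devs])
print(f"peak-to-peak: {max(devs) - min(devs):.2f} ms")
```

A host with correct timing would give deviations close to 0ms for every note, whereas MIDI buffer jitter shows up as deviations that vary from note to note, with a peak-to-peak spread that can approach one buffer length.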

Surprisingly, I found that only about a third of the hosts behaved correctly and that many popular choices for live performance introduced MIDI buffer jitter. Note that this says nothing about how these hosts behave when playing back MIDI from their internal sequencer through software instruments; this is handled differently and is outside the scope of this analysis.

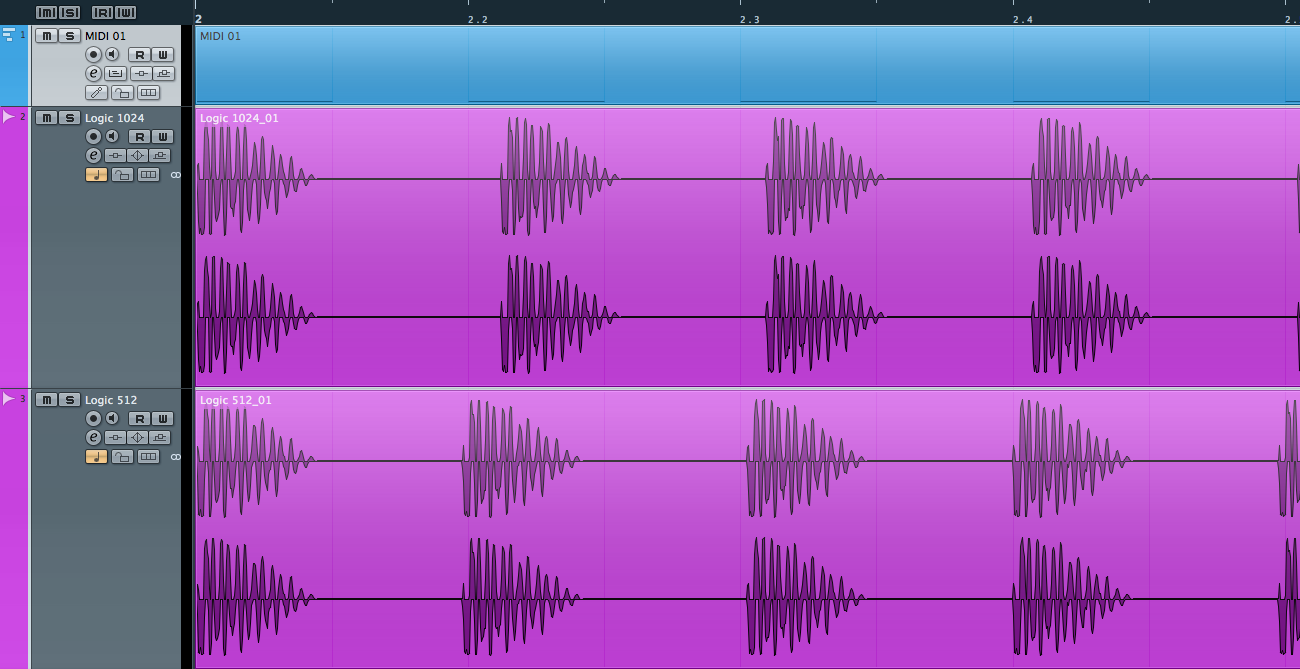

Below are two screenshots of the results in Cubase, the first of Eigenlabs EigenD’s correct timing and the second of Apple Logic Pro with jitter.

You can see that the timing of the audio is all over the place in Logic: the beginning of each waveform is supposed to line up with the vertical beat lines, and the screenshot shows it clearly deviating from them. Based on the previous explanations, the maximum jitter with a buffer of 512 samples is 11.6ms. As sample buffer sizes are reduced, the jitter is reduced as well, and a buffer of 128 samples would have at most 2.9ms of MIDI buffer jitter. The latter might be acceptable to some, but it still lies within the 1-5ms range in which the studies cited earlier found jitter to become noticeable.
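The upper bounds quoted above follow from a simple model of buffered rendering. A minimal Python sketch, assuming that events collected during one block are rendered in the next and that arrival times are exact sample positions; `render_sample` and `delay_spread_ms` are hypothetical helpers. It feeds a perfectly steady stream of eighth notes at 180BPM (7350 samples apart at 44.1kHz) into both kinds of host:

```python
SAMPLE_RATE = 44100
STEP = SAMPLE_RATE * 60 // 180 // 2  # eighth notes at 180 BPM = 7350 samples

def render_sample(arrival: int, buffer_size: int, use_timestamps: bool) -> int:
    """Sample at which an event arriving at sample `arrival` starts sounding.

    Model (an assumption): events collected during block k are rendered in
    block k + 1. A timestamp-aware host keeps the event's offset within the
    block; a host that ignores timestamps renders it at offset 0.
    """
    block = arrival // buffer_size
    offset = arrival % buffer_size if use_timestamps else 0
    return (block + 1) * buffer_size + offset

def delay_spread_ms(arrivals, buffer_size: int, use_timestamps: bool) -> float:
    """Peak-to-peak variation of the MIDI-to-audio delay, i.e. the jitter."""
    delays = [render_sample(a, buffer_size, use_timestamps) - a for a in arrivals]
    return 1000.0 * (max(delays) - min(delays)) / SAMPLE_RATE

# A perfectly steady stream with an arbitrary phase relative to the buffers.
arrivals = [100 + i * STEP for i in range(64)]

for size in (1024, 512, 256, 128):
    bound = 1000.0 * size / SAMPLE_RATE
    print(f"{size:5d} samples: timestamped {delay_spread_ms(arrivals, size, True):.2f} ms, "
          f"ignored {delay_spread_ms(arrivals, size, False):5.2f} ms "
          f"(upper bound {bound:.2f} ms)")
```

With timestamps the delay is always exactly one buffer, so the spread is 0ms; without them the delay varies from note to note while staying below one buffer length, which is the MIDI buffer jitter described above.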

The following table lists the hosts I tested and the categories they fall into. There may be preferences that can be tweaked in each host; I approached them with default settings, doing the minimal amount of work to get the test environment working. The actual project files I created can be downloaded as a zip file. If you have experience with this behavior in one of the problematic hosts and know how to fix it, please let me know and I’ll make the required changes here and add an addendum with host-specific instructions.

| MIDI buffer jitter | Correct MIDI timing |
|---|---|
| AudioMulch 2.2.2 | |
| AULab 2.2.1 (Apple) | |
| Bidule 0.9727 (Plogue) (*1) | |
| Cubase 6.5.4 (Steinberg) | |
| Digital Performer 8.01 (MOTU) | |
| DSP-Quattro 4.2.2 | |
| EigenD 2.0.72 (Eigenlabs) | |
| Jambalaya 1.3.4 (Freak Show Enterprises) | |
| Live 8.3.4 (Ableton) | |
| Logic Pro 9.1.8 (Apple) | |
| Mainstage 2.1.3 (Apple) | |
| Maschine 1.8.1 (Native Instruments) | |
| Max/MSP 6.0.8 (Cycling 74) | |
| Rax 3.0.0 (Audiofile Engineering) | |
| Reaper 4.3.1 (Cockos) | |
| StudioOne 2.0.7 (Presonus) | |
| Vienna Ensemble Pro 5.1 (VSL) | |
| VSTLord 0.3 | |

Host specific instructions

- Bidule (*1): the MIDI section of the preferences dialog contains a ‘Reduce MIDI Jitter’ option that only takes effect for new MIDI device nodes, created either manually or by reloading the project. Applying the preferences and keeping the host running without modifying the graph doesn’t change this behaviour.

Final words

There are many technical challenges when playing live with software instruments. A low-latency, jitter-free, steadily clocked hardware setup with great audio converters is indispensable. However, on the software side it’s also important to pick a solution that preserves the reliability of your hardware from end to end. Many of today’s top choices for live plugin hosting introduce a non-negligible amount of MIDI jitter that is bound to degrade your musical performance. It’s important to be wary of this and select your software solution with the same care as your hardware.

Clarifications (last changed on December 6th 2012)

Based on some questions and remarks from websites and forums, here are a few clarifications:

- This article talks about live performance of MIDI that’s streamed in from an external source and sent in real time to the hosted plugin; it doesn’t talk about the internal sequencer of the hosts mentioned. These findings are mostly important in the context of electronic musical instruments where MIDI is played by a musician and the sound is generated by software instruments from within plugin hosts or DAWs.

- Plugin developers have confirmed to me that they do get zero sample offsets when receiving live MIDI from the problematic hosts (see the comments below), while they get proper timing when playing back from the internal sequencer. This confirms my findings in the article.

- The point here is not about what the listener hears, it’s about what you feel as a musician and the consistency in terms of response. Jitter is subtly unpredictable, meaning that you feel much less connected with the music you’re playing live, which obviously bleeds into the whole musician feedback loop that gets you into an inspirational flow.

- The tests were perfectly reproducible: I ran them at least three times for each host and the results were always exactly the same.

- The behaviour doesn’t depend on the VST or AudioUnit plugin that’s used. I tested quite a few of them, and even some built-in instruments for certain DAWs; the findings were consistently in line with what’s described in the article.

- I only showed one result for each situation, correct and wrong, since there’s nothing in between. There’s either MIDI buffer jitter or not; the results of the products that fall in the same category are analogous to the screenshots that I posted, and it would be an awful lot of duplication to post them all.

- I performed the alignment of the audio samples manually by zooming in to sample precision and snipping the clip at the first recorded non-zero sample. I then zoomed back out and used bar boundary snapping in Cubase to align the start of the snipped clip with the beginning of the bar.

- The local IAC ‘in the box’ chain isn’t skewing the results: the signal chain was exactly the same for each test, I merely swapped out the final plugin host. I also performed the same tests with a second machine using MIDI over gigabit Ethernet and MIDI over USB through an iConnectMIDI interface. The behaviour was exactly the same.

- For those interested, I added a download with the full-sized screenshots, the recorded audio and Cubase 6.5 project files of the displayed test results from EigenD and Logic.
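The manual alignment described in the list above, snipping each clip at its first recorded non-zero sample, can be expressed in a few lines of code. A minimal Python sketch, assuming the recording is available as a list of floating-point sample values; the function names and the optional `threshold` parameter (for recordings with a noise floor) are hypothetical, not part of Cubase or any other host:

```python
from typing import List, Sequence, Tuple

def first_onset_index(samples: Sequence[float], threshold: float = 0.0) -> int:
    """Index of the first sample whose magnitude exceeds threshold, or -1 if none."""
    for i, value in enumerate(samples):
        if abs(value) > threshold:
            return i
    return -1

def snip_at_first_onset(samples: Sequence[float],
                        threshold: float = 0.0) -> Tuple[int, List[float]]:
    """Drop the leading silence so the clip starts at its first onset."""
    start = first_onset_index(samples, threshold)
    if start < 0:
        raise ValueError("no sample exceeds the threshold; recording is silent")
    return start, list(samples[start:])

# Toy recording: three samples of digital silence before the first hit.
start, clip = snip_at_first_onset([0.0, 0.0, 0.0, 0.2, -0.5, 0.1])
print(start, clip)
```

With the default threshold of 0.0 this matches the "first non-zero sample" rule; the snipped clip can then be placed on a bar line, as was done in Cubase.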

Acknowledgements

This article was written and researched by Geert Bevin in December 2012. Thanks to Mike Milton, Roger Linn, John Lambert, Jim Chapman, Duncan Foster and Robin Fairey for proofreading and suggestions.

References

Lunney, H. M. W. 1974. “Time as heard in speech and music.” Nature 249, p. 592.

Michon, J. A. 1964. “Studies on subjective duration 1. Differential sensitivity on the perception of repeated temporal intervals.” Acta Psychologica 22, pp. 441–450.

Van Noorden, L. P. A. S. 1975. Temporal coherence in the perception of tone sequences. Unpublished doctoral thesis. Technische Hogeschool, Eindhoven, Holland.

I know you said you didn’t do any tweaking of options, but did you enable “Overdrive” in Max? That option is not on by default but is highly recommended for any intensive MIDI processing, as it causes the Max scheduler to run in interrupt mode rather than as a low-priority thread.

Yes actually, I did turn overdrive on in Max before testing.

And Sonar?

What about other popular DAWs such as FL Studio and Adobe Audition?

I only tested DAWs and plugin hosts that I could use on Mac OS X, since my whole audio environment is set up for that. With the testing process I described it should be quite trivial to run your own tests on your favourite host and contribute your findings back. Also, I had to limit myself to those products that I either had a license for or that I could download a demo version of.

Did you test Logic with one of its built-in instruments instead of using Absynth? Maybe the AudioUnit version of Absynth isn’t respecting MIDI event timestamps, which Logic does provide to plug-ins.

cheers

– Taylor Holliday (Audulus Developer)

Yes, I think I also tested it with the EVP88 Electric Piano, though I’m not 100% sure since I’ve been through a lot of iterations. I’m using the AU version of Absynth in all the other tests as well, apart from Cubase, and it works fine for the hosts that provide sample offsets for the events in the MIDI buffers.

The thing is that Logic also provides them when playing back from the sequencer. However, it doesn’t seem to do so for live incoming MIDI data.

Just tried again with EVP88, yes definitely happening. However when I record the MIDI inside Logic, then play the recorded MIDI back and record the audio, the MIDI buffer jitter is gone. It’s really the live-performance part that’s broken.

You’re absolutely right: for live input the MIDI frame-offset is always 0. How unfortunate!

cheers

– Taylor

Thanks a lot for confirming this on your end. I was going to write a simple test plugin that logs the MIDI data; now I don’t have to.

What I find even more unfortunate is that Mainstage behaves the same and is solely geared towards live performance!

Geert, I know this is completely off topic, but I’m having lots of trouble setting up my external synths with Logic Pro 8. Are there any tutorials on here, or someone I can bounce questions off of. MIDI doesn’t seem to be coming in, and sometimes it won’t go from logic out. Help!

Sorry, I can’t help you with that. There are many forums on the internet for these kinds of questions; I’m pretty sure someone there can lend you a hand to figure your problem out.

I’ve been testing latency and jitter forever, going back to the hardware days. You hit the nail on the head. Timing can be awful with software instruments. The issue goes even deeper: sequencers themselves can have a hard time generating a steady MIDI pulse and recording MIDI accurately. Beyond testing instrument latency and jitter, I’ve also tested the MIDI output of hardware and software sequencers by playing out a steady MIDI pulse and recording the MIDI stream directly (not an instrument output; the MIDI pulse itself is recorded). This is done through a hardware MIDI out, so I can’t generalize to internal MIDI streams, but your comments suggest that there is some correlation. I found the MIDI out playback from EVERY sequencer on Windows and OS X to be flawed, sometimes moderately, sometimes awfully. Each sequencer seems to have a characteristic pattern. And with cross-platform sequencers you surprisingly see exactly the same data on both OSes and with different interfaces and drivers, so MIDI interface issues don’t seem to be at play. One of the worst offenders is Ableton Live: really awful timing with MIDI. There really is no best, but Reaper does fairly well. Where can you find great MIDI timing? Get an Amiga or Atari computer; they are dead accurate – an order of magnitude better. One other thing: if you run your software instruments through a Muse Receptor, it has fabulous timing. I have tested the Receptor 1 and 2 and found the timing to be near perfect in response to MIDI. So that would be an avenue to pursue for anyone wanting software instruments played back with accurate timing. Also check out Innerclock Systems – http://www.innerclocksystems.com/index.html as they have a couple of pages devoted to the timing performance of hardware sequencers.

Geert has identified an important aspect of software response to MIDI. However the article largely ignores the hardware side of the equation.

As Jon says, there are solutions to the problem of bad MIDI hardware. Innerclock is one; others are:

http://expert-sleepers.co.uk/es4.html

Sample accurate MIDI playback

http://www.kvraudio.com/news/advanced-pro-gear-releases-the-midi-bridge-120-no-jitter-midi-recording-interface-17694

Solution for *recording* MIDI without hardware-introduced jitter

Thanks for the midi bridge link. I had not seen that one but it looks interesting.

I spoke too soon. Looks like the company is gone. Interesting idea though.

One reason Muse Receptors are different when it comes to jitter and timing in general might be that those devices are based on Linux. I run all my audio and MIDI gear under Linux (using a low-latency kernel) and have not had the jitter and timing problems that I used to have back in the days when I ran Windows-based audio/MIDI.

Wow, thanks for the detailed article. I probably get away with the jitter in Logic by tracking with a 32-sample buffer. And I play slowly 🙂

But now you have me thinking about Live once more…

I expect hordes of Apple lawyers and fanboys coming here to tell you that you’re “measuring it wrong” 😉

Hey, I could be measuring it wrong. I’ve developed a method that seems to yield consistent and reproducible results, but I’m always open to other ideas. The best would be for everyone to measure what they’ve got so we can pool results. The more testing, the better we can see what is really going on in the sequencer vs. instrument vs. other factors. I’ve been thinking about starting a site focusing on all of this and trying to build a community of people testing their stuff. It would be great if this kind of data was routinely part of the specs for sequencers, instruments, etc.

So what do I do if I get this jitter?

I’d like to see a similar article on jitter arising from the hardware scanning rate. It is an important aspect of controller performance and one where (I presume) the Eigenharps excel, since they use a 2kHz scanning rate.

Hi Geert, I just noticed that Studio One has an “ignore midi timestamps” checkbox.

Was this unticked when you did the Studio One test? Cheers.

I seem to remember I tried both with and without.

Oh OK… I was hoping it would work properly without it… thanks for letting me know 🙂

It would be most useful to measure the amount of jitter for each buffer size, for each host software. I would be particularly interested in the numbers for Mainstage since that’s what I use.

Also wondering what amount of jitter is your cut-off between right & left columns of your table.

With my main instrument being a Roland guitar synth I have bigger issues to deal with, but this is a really interesting article nonetheless.

That’s all irrelevant, there’s no cut-off amount of jitter in this case. There’s either MIDI buffer jitter in the host or not. If not, it’s sample correct and perfect, if there is it fluctuates all the time between 0ms and the time that corresponds to the buffer size (11.6ms for 512 samples at 44.1kHz).

OK right – makes sense. Thanks for the article.

From a professional point of view, it’s unacceptable.

Personally, I’m enraged.

As a musician, I’m insulted…

Will you be able to test Logic Pro X anytime soon? Not that I believe they got that sorted out

I don’t plan on buying Logic Pro X, so I can’t test it.

This article proves what I have also experienced for two decades now: classic MIDI cannot be used to control acoustic instruments precisely enough. I also doubt that MIDI buffering is the solution. For one instrument it might work, but large amounts of data will cause more and more delay, and the bandwidth will also be exceeded. I regret that there has not been a faster MIDI standard.

From an electronics discussion board in Germany I learned that MIDI is to be redefined, and new options were shown at NAMM 2013, whereas a 1 Mbit MIDI had already been proposed a decade ago, for example here: http://www.96khz.org/oldpages/enhancedmiditransmission.htm

You need to move Studio One out of the group that suffers from MIDI jitter. It was fixed in version 2.5. One of the devs actually informed us.

I ran the test the same way you did, using Logic to generate the eighth notes. I was running an FM7 plugin with a very fast-transient DX7 patch as the VST. I recorded it all back into Logic and saw no variation: the leading edges of the waveforms lined up perfectly on the grid. Presonus must have jumped onto this pretty quickly.

Very interesting stuff though, thanks for the efforts you put in.

Please leave Studio One in the jitter group. In my test it shows jitter all over the place. Jeff, did you zoom in super close? That’s when you see the jitter.

I forgot to mention it was Studio One 2.6, plenty of jitter.

Out of interest, do you know whether Pro Tools is affected by MIDI jitter, or whether it would be in the right-hand column?

Cheers,

Mike

Sorry Mike, I don’t have Pro Tools, so I can’t check.

So the thing is, timestamps are not used by all hosts while handling live input. Timestamps are recorded onto the MIDI track while recording, and the sequencer can then use them to play back exactly on time. If you’re using a good MIDI interface, it timestamps the events very accurately when it receives them, and that timestamp is sent to the DAW and recorded on the track by all of the big-name sequencers for sure. And when you play back the track it should sound as you played it.

However, the rub is that while you’re actually recording that track, there can be inconsistent delays between when you hit the note and when you hear the sound. This has nothing to do with the timestamps; it has to do with the computer’s inability to process MIDI consistently. MIDI usually comes in through USB, and the OS buffers it up and lets the app process it in a very non-real-time way. The DAW will receive batches of MIDI data in clumps from the OS, which got them from the USB driver. The DAW can only tell the soft instrument to make sound when it finally gets those clumps of notes. The individual notes in those clumps will be timestamped and should be stored correctly on the track when you record it, but what you hear as you’re recording will be jittery crap. Timestamps have nothing to do with it.

Some apps may be more efficient than others at prioritizing the collection of MIDI data. In the old days, Win95 could actually be made to have very tight timing because there was a way to “thunk” the MIDI events through to the program… sort of force them in. But newer versions of Windows disallowed that, giving the OS total control over when to service clumps of MIDI data from the MIDI device driver. OS X is marginally better because Apple put more thought into CoreMIDI and prioritizing MIDI events, while Microsoft barely recognizes MIDI at the lower levels of the operating system. But still, there is no way around the fact that USB MIDI can do this. I find FireWire MIDI devices to be MUCH more responsive and use them whenever I can for this reason.

As to why you had some success with Reaper and a few hosts, but not much success with Logic, DP and other big-name products, it’s hard to say exactly, but lots of people have been trying to solve this problem for a very long time and basically it’s not easy to solve, or else they would have already. Back in the day I used to use Cubase on the PC and its MIDI timing was horrendously bad. At some point they all decided they had to build timestamping technology into the actual MIDI interfaces, so make sure you have a MIDI interface that has that. That won’t help your real-time jitter, but at least recorded tracks will be on time. For live performance the best they can do is try to keep the DAW out of the way of MIDI as much as possible and prioritize the processing of MIDI. Things have gotten better in recent years; it’s totally possible to play live gigs with soft instruments… but… it’s still no comparison to hardware and that is the main reason why

Hi Geert

Do you have any comment on Dewdman42’s post, just above?

He is suggesting that jitter does impact the real-time performance element (i.e. when you play a track, it’s subject to jitter during the performance), but that if the MIDI interface logs a timestamp for each MIDI event, the jitter doesn’t affect playback: it is eliminated at the recording stage and hence not reproduced when the track is played back.

That sounds like it’s not the same as your findings, but I wanted to get your thoughts. My understanding from your study was that jitter does indeed affect live performance, but that it is also reproduced by the DAW when the track is played back. You also commented that if the part is not recorded live (for example if it’s drawn manually into the DAW), jitter is not an issue.

Really keen to hear your perspective on this aspect!

Cheers,

Mike

Just adding to my comment: this article by SOS suggests that it’s only the live version that suffers jitter, but that the recorded MIDI data will be exactly as you play it:

http://www.soundonsound.com/techniques/solving-midi-timing-problems

Quote from the article:

“If you’re struggling with jittery MIDI timing problems when playing soft synths ‘live’, it may be due to an entirely separate issue that affects quite a few sequencers on both Mac and PC platforms, including Cubase, Logic, Reaper and Sonar, amongst others. Your performance will be captured exactly as you played it, and will also play back exactly the same, but since you hear jittery timing when actually playing, it makes it more difficult to play consistently in the first place.”

Does that line up with your findings?

Cheers and thanks!

Mike

Yes, that’s what I found in this article many years ago, i.e. that many hosts don’t properly offset the MIDI messages according to their time stamps within the audio buffer when playing live. My article does not talk about recorded MIDI, it talks about jitter during live musical performance.

Thanks for your answer on this, Geert.

I am glad to know that this only affects the sound output in real time, but that no matter the DAW, the MIDI data that’s recorded isn’t subject to jitter.

I am able to comfortably operate Logic at a sample buffer of 64 samples, so that will minimise jitter from a performance perspective. However, until I correctly understood your article, I had thought that the MIDI data that was recorded was also subject to the effects of jitter.

Thanks again and all the best,

Mike